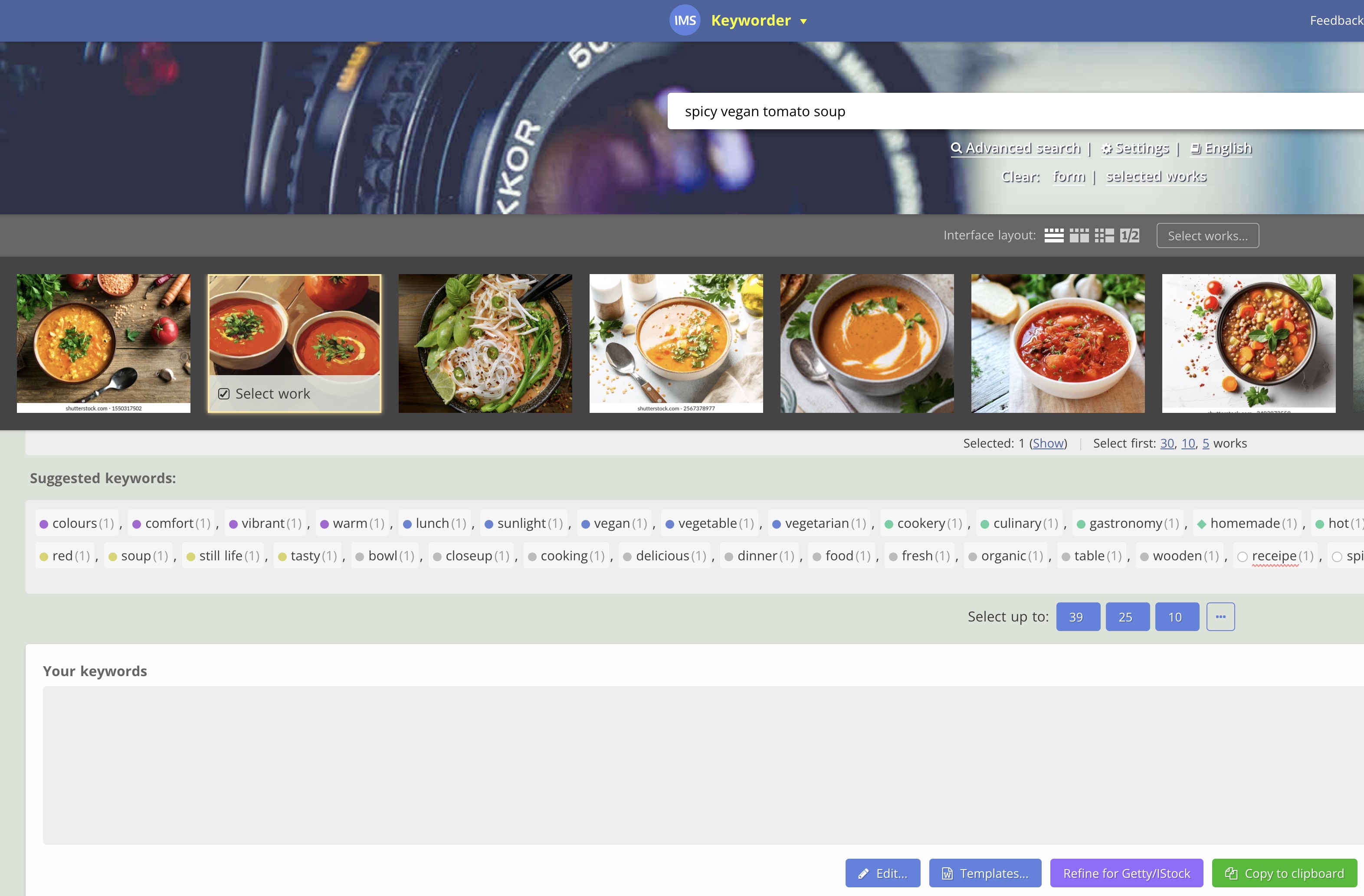

Recently, I started using large language models (LLMs) to help with image keywording, especially when my usual tool, IMStocker Keyworder, didn't give me the results I was hoping for.

Here’s an example of a prompt I’ve been using:

Generate 50 comma-separated keywords for a photo of a bowl of soup described as "spicy vegan tomato soup is made creamy with coconut milk and flavoured with curry spices, like cumin, fresh ginger, and turmeric".
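If you reuse this prompt for many photos, it can help to template it and to tidy up the reply. Here is a minimal Python sketch of that idea. The function names `build_keyword_prompt` and `parse_keywords` are made up for illustration, and the clean-up rules (lowercasing, dropping duplicates) are my assumption about what a stock-photo workflow would want.

```python
def build_keyword_prompt(subject: str, description: str, count: int = 50) -> str:
    """Return a keywording prompt in the same shape as the example above."""
    return (
        f"Generate {count} comma-separated keywords for a photo of {subject} "
        f'described as "{description}".'
    )


def parse_keywords(reply: str) -> list[str]:
    """Split a comma-separated reply into clean, de-duplicated keywords."""
    seen = set()
    keywords = []
    for raw in reply.split(","):
        # Trim whitespace and a trailing full stop, then normalise the case.
        keyword = raw.strip().strip(".").lower()
        if keyword and keyword not in seen:
            seen.add(keyword)
            keywords.append(keyword)
    return keywords
```

The order of the keywords is preserved, which matters if the model lists the most relevant ones first.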

IMS Keyworder uses a visual process: you pick a few sample photos that look similar to yours, and it generates keywords based on those. It's a great tool, but it doesn’t use AI or understand your images beyond helping you find matching visuals.

That’s where LLMs now shine: they can process both text and images, which enables much richer metadata generation. If your prompt is detailed enough, an LLM can produce highly accurate results. Better yet, if you attach a photo as part of the input, the model can generate the full set of metadata: title, caption, and keywords. I haven’t tested this extensively yet, but I suspect that if you include GPS coordinates in your prompt, the model might even identify the landmark in your photo.

Automating Metadata with LLMs

This kind of manual prompt-based workflow works well if you're only dealing with a handful of images. But wouldn’t it be great if you could automate it in Lightroom? Imagine generating a smaller version of your photo, sending it to an LLM via API, and getting structured metadata back automatically.
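To make the idea concrete, here is a rough Python sketch of the "send a small image plus a prompt" step. It assumes the downsized JPEG already exists as bytes, and it builds a request body in the `contents` / `parts` / `inlineData` shape used by Gemini-style `generateContent` endpoints. The prompt wording, the `METADATA_PROMPT` constant, and the `build_request_body` name are illustrative assumptions, not the exact payload my plugin sends.

```python
import base64
import json

# Hypothetical prompt asking for all three metadata fields in one reply.
METADATA_PROMPT = (
    "Describe this photo for a stock library. Reply with a JSON object "
    'with the keys "title", "caption", and "keywords" (a list of strings).'
)


def build_request_body(jpeg_bytes: bytes, prompt: str = METADATA_PROMPT) -> dict:
    """Combine a base64-encoded JPEG and a text prompt into one user message."""
    encoded = base64.b64encode(jpeg_bytes).decode("ascii")
    return {
        "contents": [
            {
                "role": "user",
                "parts": [
                    # The image travels inline, so no separate upload is needed.
                    {"inlineData": {"mimeType": "image/jpeg", "data": encoded}},
                    {"text": prompt},
                ],
            }
        ]
    }


def to_json(body: dict) -> str:
    """Serialise the body exactly as it would be sent over HTTP."""
    return json.dumps(body)
```

Sending a reduced-size export rather than the original keeps the base64 payload small, which is the main reason for the "smaller version" step.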

That was the goal I set out to achieve.

I explored various LLM APIs and ultimately chose Google Cloud Vertex AI. It's cost-effective (pay-as-you-go) and has a generous free tier. You can craft and test prompts using Vertex AI Studio. Once you're happy with the output, it generates a code snippet you can drop into your own application. I tested both Python and curl (via Postman), and everything worked well.
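For reference, a call of this kind can be sketched with nothing but the Python standard library. This is not the snippet Vertex AI Studio generates. It is a hand-written approximation that assumes the regional `generateContent` REST endpoint and an OAuth access token (for example, one printed by `gcloud auth print-access-token`). The project, location, and model values are placeholders you would replace with your own.

```python
import json
import urllib.request


def build_vertex_request(
    project: str, location: str, model: str, access_token: str, body: dict
) -> urllib.request.Request:
    """Prepare (but do not send) a POST request to a generateContent endpoint."""
    # Assumed URL layout for a Google-published model on Vertex AI.
    url = (
        f"https://{location}-aiplatform.googleapis.com/v1/projects/{project}"
        f"/locations/{location}/publishers/google/models/{model}:generateContent"
    )
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json; charset=utf-8",
        },
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 60.0) -> dict:
    """Send the prepared request and decode the JSON reply."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

Splitting "build" from "send" makes it easy to inspect the URL and headers before spending any quota, which is roughly what I was doing by hand in Postman.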

Creating a Lightroom Plugin

The next step was building a Lightroom plugin to tie it all together. I’ve worked with APIs throughout my career, but Lightroom plugin development was new territory. Lightroom plugins are written in Lua, a lightweight, high-level scripting language designed mainly for embedding in applications and games. Adobe provides an SDK consisting of Lua scripts that let you extend Lightroom’s functionality.

I installed Lua and began replicating the Python functionality in Lua using standard libraries. For HTTP requests, I used the LuaSocket library, and for parsing JSON responses, the dkjson library. Along the way, I discovered LuaRocks, a package manager for Lua (similar to pip for Python or npm for JavaScript), which made installing libraries much easier.
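The response-parsing half is the part that maps most directly between the two languages. Below is a Python sketch of the logic, which the Lua version would do with dkjson. It assumes a Gemini-style reply where the generated text sits under `candidates[0].content.parts`, and that the prompt asked the model for a JSON object with `title`, `caption`, and `keywords` keys. Both the reply layout and the key names are assumptions for illustration, and `extract_metadata` is a hypothetical helper name.

```python
import json


def extract_metadata(response_text: str) -> dict:
    """Pull title, caption, and keywords out of a raw API response string."""
    reply = json.loads(response_text)
    parts = reply["candidates"][0]["content"]["parts"]
    model_text = "".join(part.get("text", "") for part in parts)

    # Models sometimes wrap their JSON in a Markdown code fence or extra prose,
    # so keep only the span from the first "{" to the last "}".
    start, end = model_text.find("{"), model_text.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in the model reply")
    metadata = json.loads(model_text[start : end + 1])

    return {
        "title": str(metadata.get("title", "")),
        "caption": str(metadata.get("caption", "")),
        "keywords": [str(k).strip() for k in metadata.get("keywords", [])],
    }
```

Missing keys fall back to empty values instead of raising, so a partial reply still yields something that can be written into the photo's metadata fields.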

Here’s the original script I wrote: https://gist.github.com/melastmohican/383c3f92029eec269b72b611011fb83c

Once that worked, I adapted it into a real Lightroom plugin. I used this project as a starting point: lightroom-alt-text-plugin. Since both used the same JSON library, the response-parsing code needed few changes, but I had to rewrite the HTTP request code to use the Lightroom SDK's functions instead of LuaSocket.

The final result is now available on GitHub: lrc-ai-describe

I’m currently testing it with my own image collection, fixing bugs, and continuously improving the code.